This blog post was recognised on the Microsoft Cloud Show podcast, and here's the audio snippet (below) where they specifically talked about it. Thanks guys!

Microsoft has the second biggest network in the world behind the internet

Microsoft Cloud Show Podcast – Episode 281


Want to understand more about Azure’s global WAN and Microsoft’s network backbone?

A recent 2018 Public Cloud Performance Benchmark Report from ThousandEyes says that Azure has the best global WAN of the three major public cloud providers, and all of it is our own private network.

Some of the highlights in this report are:

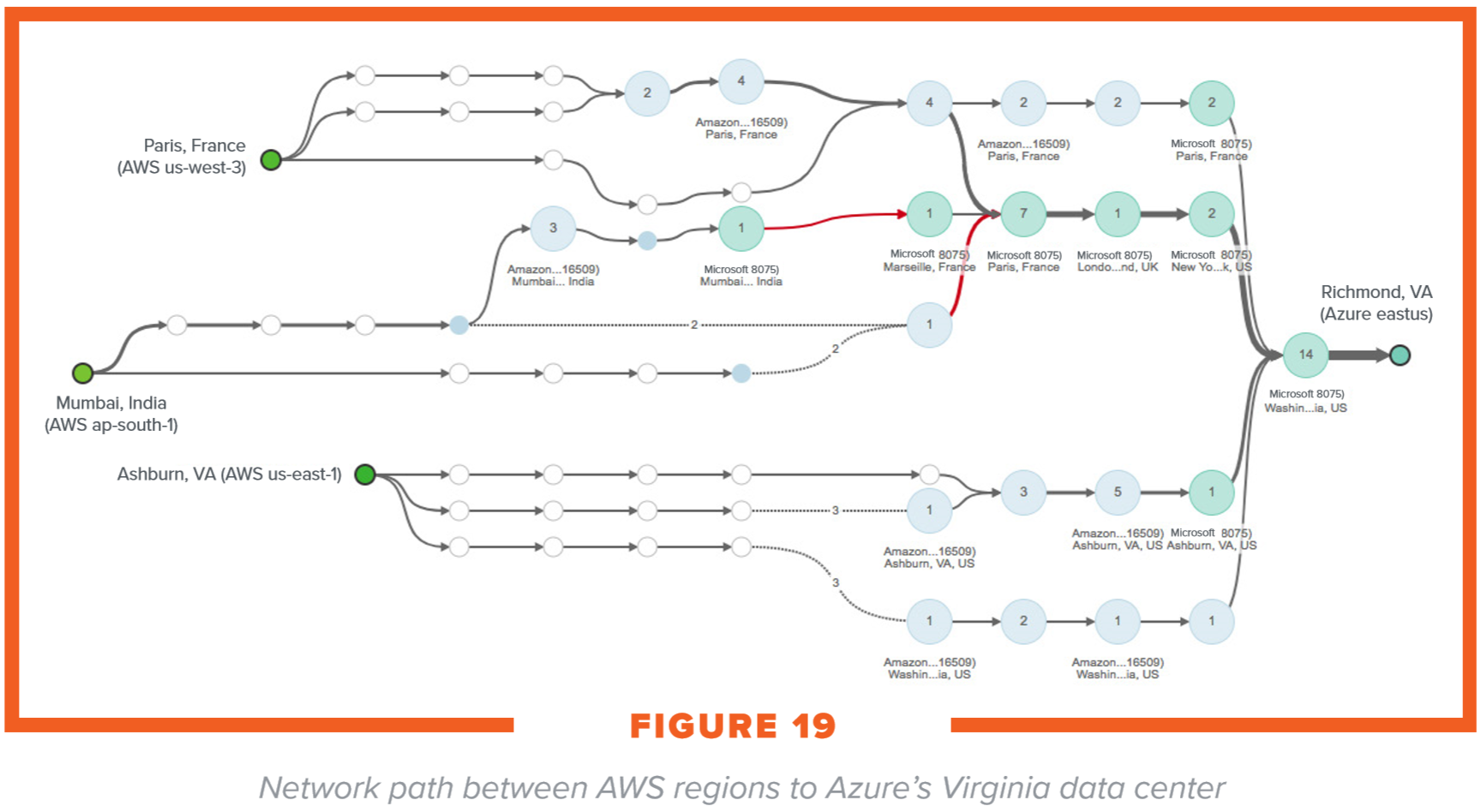

- AWS’ network design forces traffic from the end user through the public Internet, only to enter the AWS backbone closest to the target region

- AWS architects its network to push traffic away from it as soon as possible

- A possible explanation for this behavior is the high likelihood that the AWS backbone is the same as that used for amazon.com, so network design ensures that the common backbone is not overloaded

- AWS, the current market leader in public cloud offerings, focused initially on rapid delivery of services to the market, rather than building out a massive backbone network

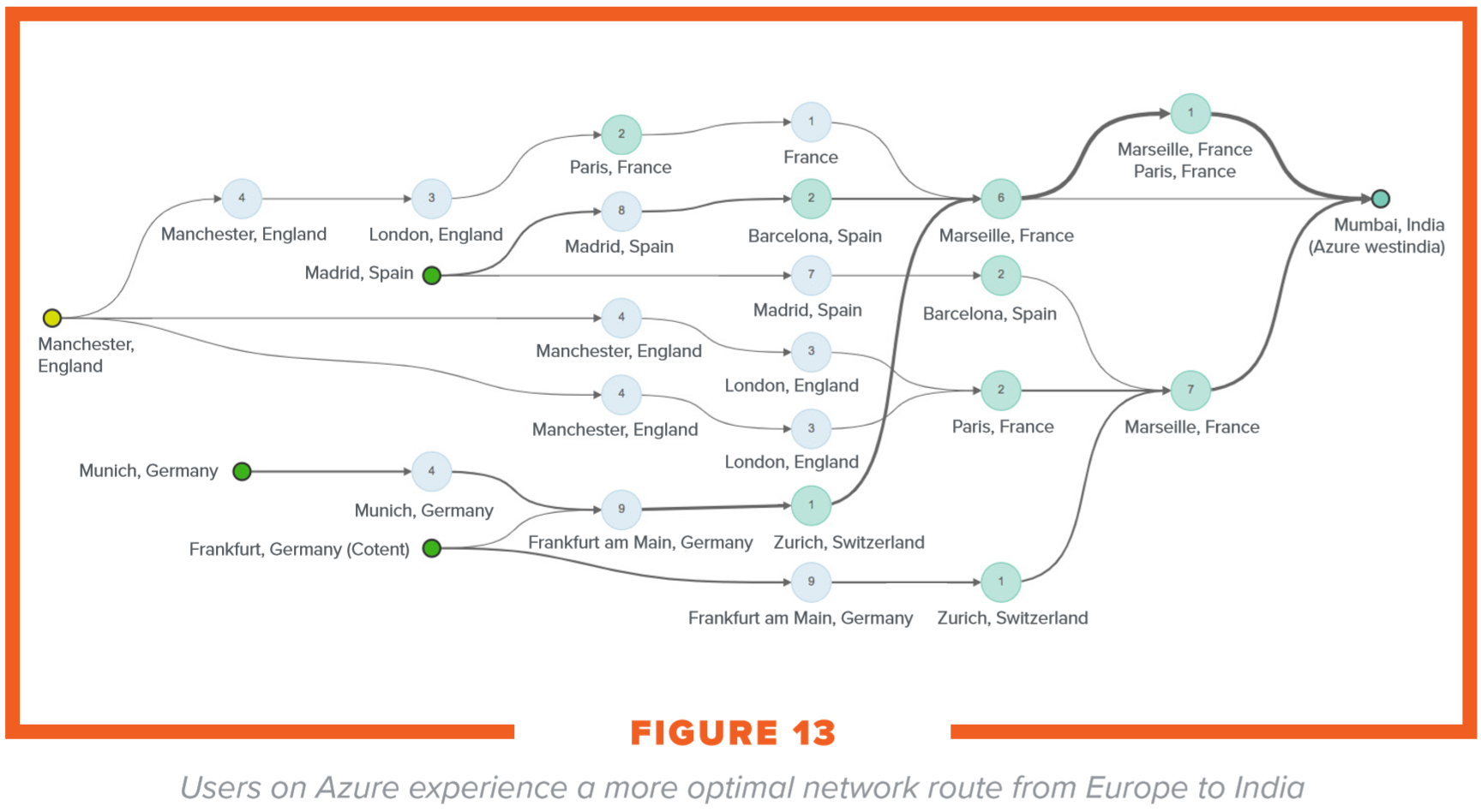

- GCP users connecting to data centers located in India from Europe experience approximately three times the network latency (359 ms) when compared to AWS and Azure (128 ms)

- GCP relies heavily on its internal backbone to move traffic globally. The lack of direct backbone connectivity from Europe to India in the Google Cloud network results in traffic taking a circuitous route (Figure 12) through the United States to reach India. This results in much higher network latency, with the potential to affect users connecting to workloads in GCP Mumbai. Azure, on the other hand, finds an optimal route to India (Figure 13), translating into a better user experience
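To put the Europe-to-India figures in perspective, here is a small worked calculation. It uses only the two latencies quoted above; the multi-round-trip case is a hypothetical illustration that assumes the quoted numbers are per round trip and that the round trips happen one after another.

```python
def latency_penalty(slow_ms: float, fast_ms: float, round_trips: int = 1) -> tuple[float, float]:
    """Return (ratio, extra_ms) of the slower path over the faster one.

    extra_ms assumes the figures are per round trip and that the
    round trips are sequential (no overlap).
    """
    ratio = slow_ms / fast_ms
    extra_ms = (slow_ms - fast_ms) * round_trips
    return ratio, extra_ms


# Europe -> India latencies quoted from the report, in milliseconds
GCP_MS = 359
AWS_AZURE_MS = 128

ratio, extra = latency_penalty(GCP_MS, AWS_AZURE_MS)
print(f"{ratio:.1f}x the latency, {extra:.0f} ms extra")  # 2.8x the latency, 231 ms extra

# Hypothetical: an exchange that needs 5 sequential round trips
_, extra5 = latency_penalty(GCP_MS, AWS_AZURE_MS, round_trips=5)
print(f"{extra5:.0f} ms extra over 5 round trips")  # 1155 ms extra over 5 round trips
```

A ratio of about 2.8 is where the report's "approximately three times" comes from, and the penalty compounds for anything chatty.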

What does all this mean? Microsoft comes out on top in this report, largely because of our massively fast WAN links, with speeds of up to 172Tbps, and our POPs (Points of Presence). POPs are edge sites strategically placed around our datacenter regions to bring traffic onto our network, aka the last mile, putting the datacenter one step closer to the customer.

Microsoft has been doing this for a long time with Office 365. About three years ago, Microsoft started recommending that customers connect to Office 365 over the public internet in the first instance rather than using ExpressRoute. Why? It comes down to the way we bring our services closer to the user over the last mile, so users can connect optimally into Microsoft's network.

If you look at the public PeeringDB website, you can see Microsoft's private peering POPs. The list below shows Microsoft's POPs in Australia.

Depending on the ISP you use for your regular internet connection, the ISP normally hands off traffic for Microsoft's public services to the local Microsoft POP. Imagine you are sitting in an internet cafe in Perth: you open your browser (Chrome/Edge/Firefox) and go to http://outlook.office.com. The traffic should be handed off to the Microsoft POP in Perth, and from there it is carried back to the Microsoft datacenter over Microsoft's backbone, giving you the best experience.
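The Perth example can be sketched as a simplified model: the ISP hands traffic to whichever Microsoft POP is cheapest to reach from its network (usually the nearest one), and the rest of the journey is on Microsoft's backbone. The `Pop` class, the `isp_cost` values and the `handoff_path` helper are all hypothetical; real ISPs make this decision with their own BGP and routing policy.

```python
from dataclasses import dataclass


@dataclass
class Pop:
    city: str
    isp_cost: int  # hypothetical routing cost from the user's ISP to this POP


def handoff_path(user_city: str, pops: list[Pop], destination: str) -> list[str]:
    """Simplified model: the ISP hands traffic to the lowest-cost Microsoft POP,
    then Microsoft's backbone carries it the rest of the way."""
    pop = min(pops, key=lambda p: p.isp_cost)
    return [f"user in {user_city}", "ISP", f"Microsoft POP ({pop.city})",
            "Microsoft backbone", destination]


# Hypothetical costs as seen from a Perth ISP
pops = [Pop("Perth", 1), Pop("Melbourne", 20), Pop("Sydney", 25)]
for hop in handoff_path("Perth", pops, "Office 365 datacenter"):
    print(hop)
```

Only the first two hops cross the public internet; everything after the POP stays on Microsoft's network.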

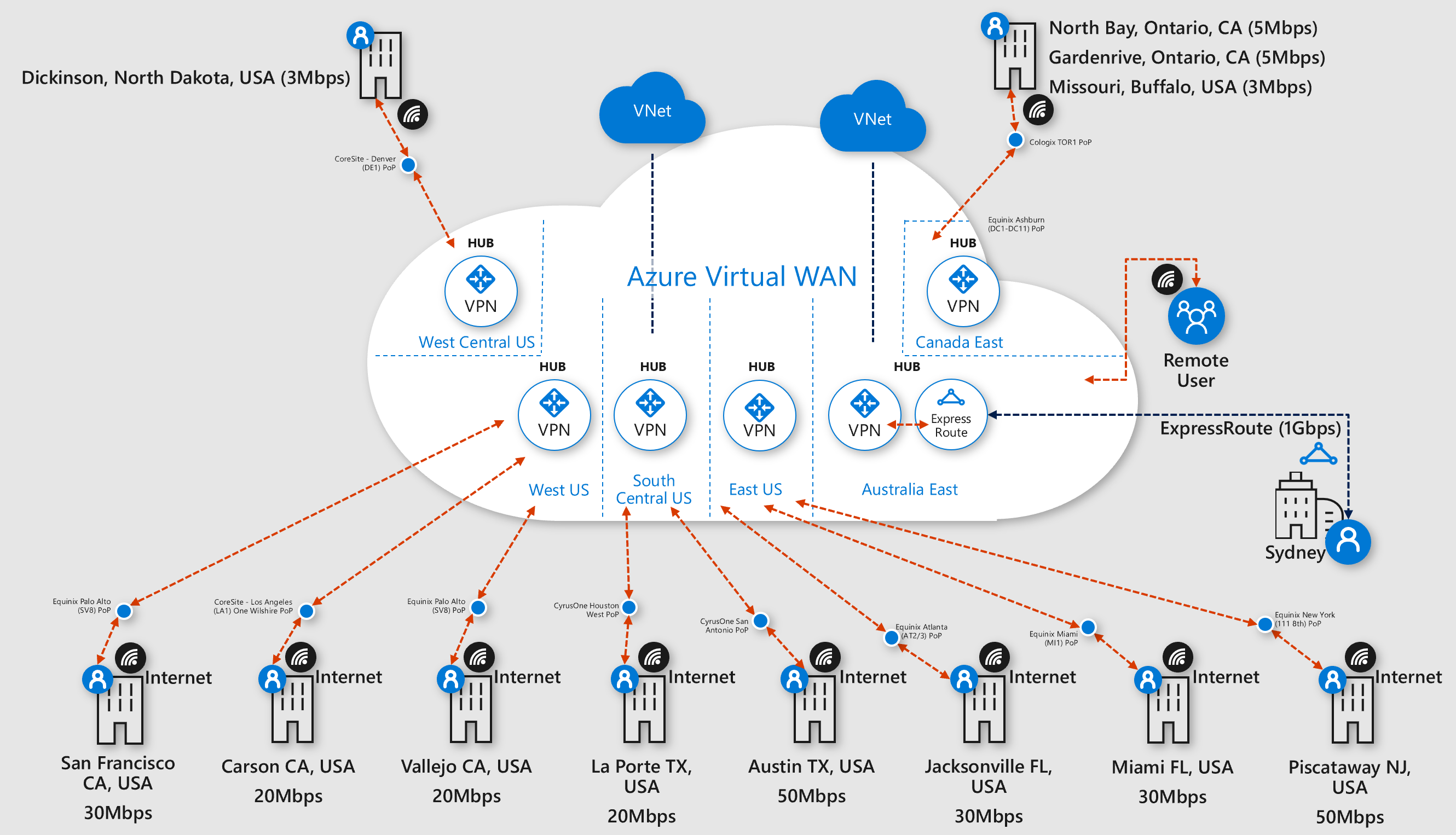

Azure Virtual WAN works in a similar way. Each Azure Virtual WAN consists of one Virtual Hub per Azure region, and multiple branch sites can hang off a given Virtual Hub. However, for a branch site to connect to the hub, it needs to connect over the public internet.
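The structure described above (one Virtual WAN, one Virtual Hub per region, many branches per hub) can be modelled as a small data structure. This is a toy sketch of the relationships only, not the Azure SDK; the class names, the `example-wan` name and the branch names are hypothetical, while `australiaeast` and `australiasoutheast` are used as example Azure region names.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualHub:
    region: str
    branches: list[str] = field(default_factory=list)


@dataclass
class VirtualWan:
    name: str
    hubs: dict[str, VirtualHub] = field(default_factory=dict)

    def add_hub(self, region: str) -> VirtualHub:
        # One Virtual Hub per Azure region, as described in the post
        if region in self.hubs:
            raise ValueError(f"{self.name} already has a hub in {region}")
        self.hubs[region] = VirtualHub(region)
        return self.hubs[region]

    def connect_branch(self, branch: str, region: str) -> None:
        # Many branch sites can hang off the same regional hub
        self.hubs[region].branches.append(branch)


wan = VirtualWan("example-wan")  # hypothetical name
wan.add_hub("australiaeast")
wan.add_hub("australiasoutheast")
wan.connect_branch("Cairns", "australiaeast")
wan.connect_branch("Brisbane", "australiaeast")
wan.connect_branch("Melbourne", "australiasoutheast")
for region, hub in wan.hubs.items():
    print(region, hub.branches)
```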

The diagram below shows sample branch sites in green, our POPs in Australia in red, and the Azure Australia regions as Azure logos.

Azure Virtual WAN

Is your network expanding and are costs increasing? Do you have, or plan to have, MPLS, and are you considering internet connectivity options to lower costs? Virtual WAN gives you a hybrid connectivity approach that helps simplify your network.

Azure Virtual WAN effectively provides two solutions: it can be thought of as an MPLS alternative, and as an efficient way for all branch sites to connect to Azure. A further benefit is that you don't need to stand up your own VPN gateway in Azure, saving $$$.

From the map above:

- An SD-WAN appliance at each branch site connects to the Virtual Hub via the closest POP; from the POP, traffic is carried across Microsoft's backbone.

- Long haul traffic can use our backbone, while short haul traffic can go direct:

  - Long haul: if a site in Cairns wanted to connect to a site in Perth, traffic would go via Microsoft's backbone between Brisbane & Perth.

  - Short haul: if two sites in Cairns wanted to talk to each other, traffic can be configured to go direct using the SD-WAN appliances themselves.

- All branch sites get the best connectivity back to Azure VNets, taking the best path back to the Azure datacenters.
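The long haul / short haul split above amounts to a per-flow path decision on the SD-WAN appliance. Here is a minimal sketch, assuming a hypothetical policy where "same city" means short haul and anything else rides the backbone; the `Site` class, `choose_path` and the Cairns-to-Brisbane POP mapping (inferred from the example above) are illustrative assumptions, not a vendor's configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    city: str
    nearest_pop: str  # hypothetical: the Microsoft POP this branch's SD-WAN appliance uses


def choose_path(src: Site, dst: Site) -> list[str]:
    """Hypothetical SD-WAN policy: same-city traffic is short haul, everything else long haul."""
    if src.city == dst.city:
        # Short haul: the SD-WAN appliances send traffic to each other directly
        return [src.name, dst.name]
    # Long haul: enter Microsoft's backbone at the source's POP, leave at the destination's POP
    return [src.name, f"POP {src.nearest_pop}", "Microsoft backbone",
            f"POP {dst.nearest_pop}", dst.name]


cairns_a = Site("Cairns branch A", "Cairns", nearest_pop="Brisbane")
cairns_b = Site("Cairns branch B", "Cairns", nearest_pop="Brisbane")
perth = Site("Perth branch", "Perth", nearest_pop="Perth")

print(" -> ".join(choose_path(cairns_a, perth)))
print(" -> ".join(choose_path(cairns_a, cairns_b)))
```

A real appliance would use measured latency, cost or application rules rather than a city match, but the shape of the decision is the same.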

Here's an example of a customer's network of branch sites in the US using Azure Virtual WAN: each site connects to the Virtual Hub in the closest region, using an even closer POP to bring its traffic into that Azure region as efficiently as possible.

The small blue dots at the bottom are our POP locations. There is one Azure Virtual WAN (global), which spans multiple Azure regions with one Virtual Hub per region.

Here's a map of all Azure datacenters (except government) and Microsoft's POP locations, followed by the same map zoomed out.

The Azure logos indicate Azure datacenters, and the red icons indicate Microsoft POPs.

To give you an idea of the massive investment and work going into our global network, in this video (cued to the exact position) Albert Greenberg, CVP Azure Networking, talks about Microsoft's networking history: we didn't originally have the interconnects between zones, but we put them in without anyone noticing. We did a massive migration of workloads and essentially changed the wings, the engine and the cockpit of the plane while it was in flight, unnoticed.

In this YouTube video (cued to the exact position), Albert talks about Azure Virtual WAN and the technology behind the scenes. We have removed the bottleneck of processing multi-tenant networking on the host CPU by using FPGA cards (SmartNICs) to offload host networking to hardware. This gives software-like programmability with hardware-like performance, and reduces host CPU utilisation.

More on this from the paper "Azure Accelerated Networking: SmartNICs in the Public Cloud":

As a large public cloud provider, Azure has built its cloud network on host-based software-defined networking (SDN) technologies, using them to implement almost all virtual networking features, such as private virtual networks with customer supplied address spaces, scalable L4 load balancers, security groups and access control lists (ACLs), virtual routing tables, bandwidth metering, QoS, and more. These features are the responsibility of the host platform, which typically means software running in the hypervisor.

The cost of providing these services continues to increase. In the span of only a few years, we increased networking speeds by 40x and more, from 1GbE to 40GbE+, and added countless new features. And while we built increasingly well-tuned and efficient host SDN packet processing capabilities, running this stack in software on the host requires additional CPU cycles. Burning CPUs for these services takes away from the processing power available to customer VMs, and increases the overall cost of providing cloud services.

Single Root I/O Virtualization (SR-IOV) [4, 5] has been proposed to reduce CPU utilization by allowing direct access to NIC hardware from the VM. However, this direct access would bypass the host SDN stack, making the NIC responsible for implementing all SDN policies. Since these policies change rapidly (weeks to months), we required a solution that could provide software-like programmability while providing hardware-like performance.
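One general way to get "software-like programmability" and "hardware-like performance" together is a flow cache: software evaluates the policy for the first packet of a flow, and the resulting action is cached in a fast exact-match table so later packets skip the software path. The toy model below illustrates that idea only; it is not Azure's implementation, and the class, method and policy names are hypothetical. The dictionary merely stands in for a table a SmartNIC would hold.

```python
from typing import Callable

# (src_ip, dst_ip, src_port, dst_port, protocol)
FlowKey = tuple[str, str, int, int, str]
Policy = Callable[[FlowKey], str]


class FlowCacheOffload:
    """Toy model: a programmable software slow path plus a cached fast path."""

    def __init__(self, policy: Policy) -> None:
        self.policy = policy
        self.hw_table: dict[FlowKey, str] = {}  # stands in for a NIC's exact-match flow table
        self.slow_path_hits = 0

    def process(self, key: FlowKey) -> str:
        action = self.hw_table.get(key)
        if action is None:
            # Cache miss: evaluate the (easily changed) software policy...
            self.slow_path_hits += 1
            action = self.policy(key)
            # ...and cache the result so the flow's later packets skip software
            self.hw_table[key] = action
        return action

    def update_policy(self, policy: Policy) -> None:
        # New rules live in software; flush cached decisions so they take effect
        self.policy = policy
        self.hw_table.clear()


def allow_https(key: FlowKey) -> str:
    return "allow" if key[3] == 443 else "drop"


nic = FlowCacheOffload(allow_https)
flow = ("10.0.0.4", "10.0.1.5", 50000, 443, "tcp")
for _ in range(3):
    nic.process(flow)
print(nic.slow_path_hits)  # 1: only the first packet used the software path

nic.update_policy(lambda key: "drop")
print(nic.process(flow), nic.slow_path_hits)  # drop 2
```

Policy changes that arrive every few weeks only touch the software path; the cached path stays fast.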

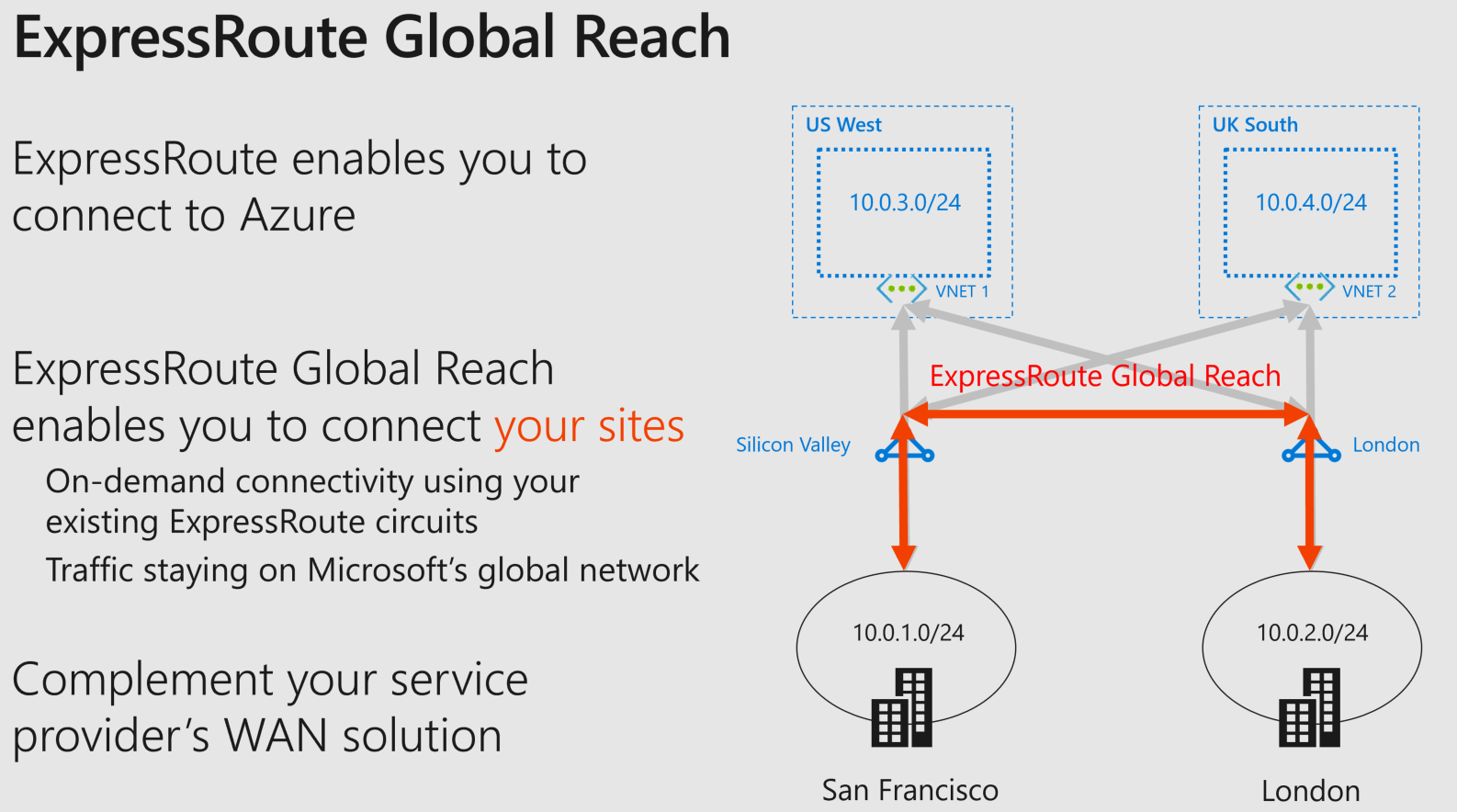

ExpressRoute Global Reach

Since we have a great global network, another way we're enabling customers to make use of it is by allowing them to connect their own datacenters together over our backbone, via our edge.